Yeonsang Shin

신연상

Seoul, South Korea

Hello! I'm an M.S. student at the KAIST Visual Computing Lab (VCLAB), supervised by Professor Min H. Kim. I've been fortunate to work with amazing professors Bohyung Han, Sungroh Yoon, Jongho Lee, and Kangwook Lee.

I believe we are entering an era where humans must achieve precise control over AI systems. My current research focuses on computational imaging, where I explore how additional sensors and signals, such as event cameras, can bridge gaps in existing generative AI approaches. By leveraging richer sensory data, I aim to make AI-generated outputs more reliable and controllable.

Beyond my academic work, I'm part of T.A.S.A., an independent, student-run research group dedicated to developing mathematical frameworks for AI control and safety. Together with my awesome research partner Beomjun Kim, I'm developing our foundational work: "Domain-Agnostic Scalable AI Safety Ensuring Framework". Our research explores new methodologies that enable direct, principled control over AI systems and applies these principles to emerging challenges in AI safety.

Publications

2024

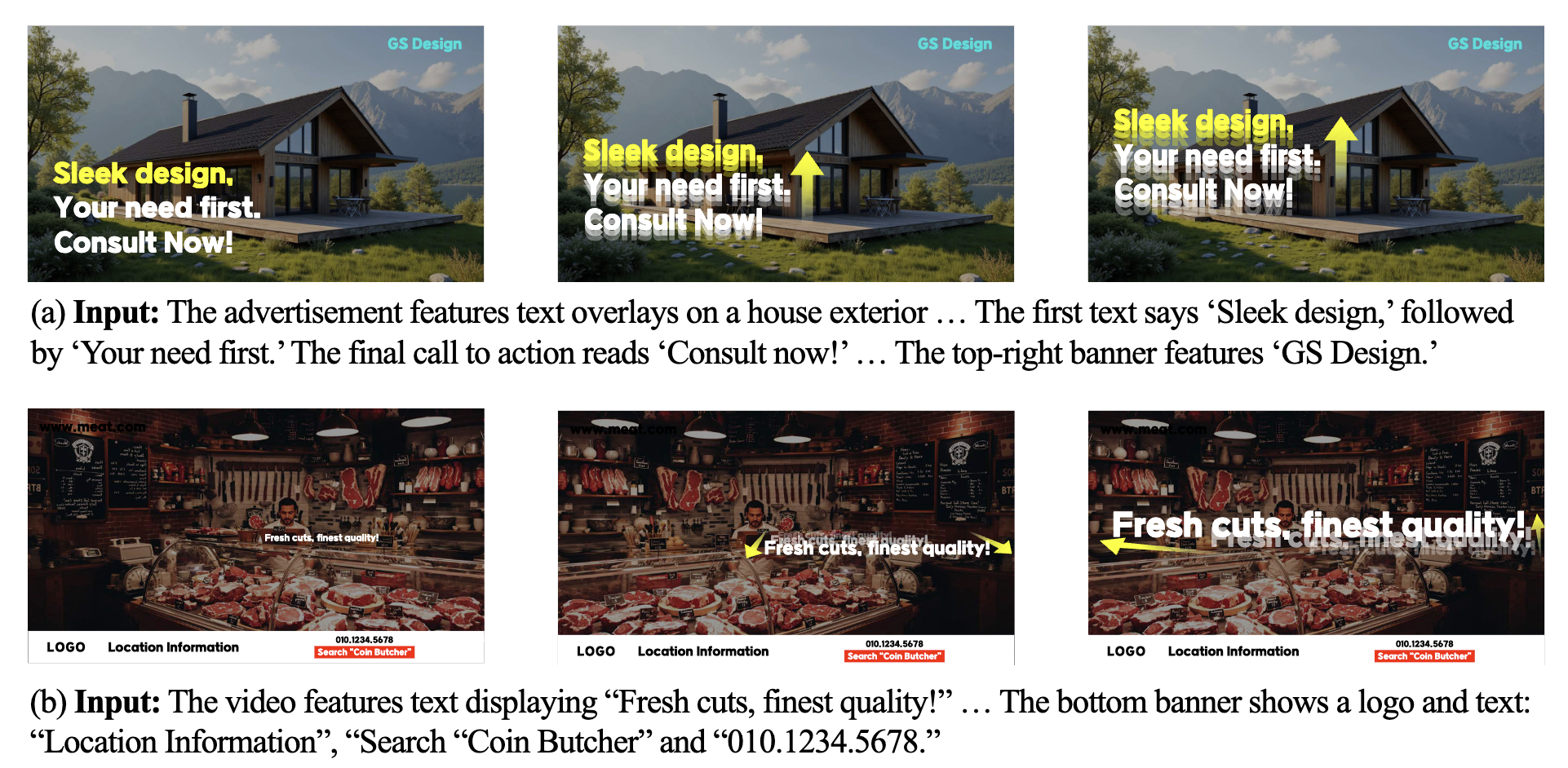

Generating Animated Layouts as Structured Text Representations

TL;DR: We've created VAKER, a system that addresses a key limitation of AI video generation: producing videos with clear, readable text and precise control over animated elements. Unlike existing models, which struggle to render text, VAKER treats video layouts as structured text representations and uses a three-stage process to generate visually appealing video advertisements with dynamic text animations.